Hyung Won Chung on Twitter: "New paper + models! We extend…"

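Hyung Won Chung is currently a research scientist at OpenAI, where his research focuses on large language models. Before that, he worked at Google Brain and did his PhD at MIT. He has contributed to several major projects, such as PaLM, a 540-billion-parameter large language model, and BLOOM, a 176-billion-parameter open multilingual language model. Notably, Hyung Won Chung, an OpenAI research scientist and core contributor to o1, has just shared a talk he gave at MIT, titled "Don't Teach. Incentivize."

Hyung Won Chung, Dan Garrette, Kiat Chuan Tan, and Jason Riesa.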


An invited lecture I gave at Stanford with my colleague Hyung Won Chung is available on YouTube. In this lecture at Stanford University, he tells a story that there is a single dominant driving force…

Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Yunxuan Li, Xuezhi Wang, Mostafa Dehghani

Synced (机器之心) report, adapted from the Ragntune blog. Editor: Panda. From emergence and scaling laws to instruction tuning and RLHF, an OpenAI scientist takes you into the world of LLMs.

Recently, after the release of OpenAI o1, OpenAI research scientist Hyung Won Chung released a talk he gave at MIT last year, when he was thinking about a paradigm shift. The talk was titled "Don't Teach. Incentivize." We cannot enumerate every skill we want from an artificial general intelligence (AGI) system, because there are too many of them.

CS25 has become one of Stanford's hottest seminar courses, featuring top researchers at the forefront of Transformers research such as Geoffrey Hinton, Ashish Vaswani, and Andrej Karpathy.

Our class has had an incredibly popular reception within and outside Stanford, with millions of total views on YouTube. Each week, we dive into the latest breakthroughs in AI, from large language models…

Scaling Language Modeling with Pathways. Aakanksha Chowdhery, Sharan Narang, Jacob Devlin, Maarten Bosma, Gaurav Mishra, Adam Roberts, Paul Barham, Hyung Won Chung, …

Hyung Won Chung, Noah Constant, Xavier Garcia, Adam Roberts, Yi Tay, Sharan Narang, Orhan Firat. CoRR abs/2304.09151 (2023).

Hyung Won Chung is a researcher specializing in large language models. He received his PhD from MIT, then worked at Google Brain for more than three years before joining OpenAI in February of this year.

Instead of your papers, I'd love to learn about the most difficult technical problem you worked on and your lessons. It doesn't have to be ML.

I value exceptional technical skill a lot more than… We are hiring in the ChatGPT team! Happy to chat about this position.

It does so by directly controlling the number of epochs of each language.

Pretraining often operates with a "token budget", a fixed total number of tokens the model sees during training.
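The budget-and-epochs idea above can be sketched in a few lines. The passage appears to describe UniMax, the language-sampling method from the CoRR abs/2304.09151 paper listed earlier; what follows is a minimal sketch of that style of allocation, not the authors' implementation. It assumes corpus sizes and the budget are measured in the same unit (tokens here), and the function name `unimax_sampling` and its parameters are hypothetical. Languages are visited from smallest to largest; each is offered an equal share of the budget that is still unallocated, capped at `max_epochs` passes over its corpus, and any leftover flows to the larger languages.

```python
from typing import Dict


def unimax_sampling(
    corpus_sizes: Dict[str, float], budget: float, max_epochs: float
) -> Dict[str, float]:
    """Hypothetical UniMax-style sampler returning per-language sampling probabilities.

    corpus_sizes: tokens available per language (assumed unit).
    budget: total tokens to draw during pretraining (the "token budget").
    max_epochs: cap on passes over any single language's corpus.
    """
    if budget <= 0:
        raise ValueError("budget must be positive")
    # Visit languages from the smallest corpus to the largest.
    langs = sorted(corpus_sizes, key=lambda lang: corpus_sizes[lang])
    remaining = budget
    allocation: Dict[str, float] = {}
    for i, lang in enumerate(langs):
        # Uniform share of whatever budget is still unallocated.
        fair_share = remaining / (len(langs) - i)
        # Never repeat a language more than max_epochs times.
        allocation[lang] = min(fair_share, max_epochs * corpus_sizes[lang])
        remaining -= allocation[lang]
    # Normalize token allocations into sampling probabilities.
    total = sum(allocation.values())
    return {lang: tokens / total for lang, tokens in allocation.items()}


if __name__ == "__main__":
    probs = unimax_sampling({"a": 10, "b": 100, "c": 1000}, budget=600, max_epochs=2)
    print({lang: round(p, 4) for lang, p in probs.items()})
    # {'a': 0.0333, 'b': 0.3333, 'c': 0.6333}
```

In this toy run the two small languages hit the 2-epoch cap (20 and 200 tokens), and the largest language absorbs the remaining 380 tokens, well under one epoch. When no cap binds, the result is simply uniform across languages.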

My research focuses on large language models. Before joining OpenAI, I was at Google Brain and did my PhD at MIT.

J Swaminathan, HW Chung, DM Warsinger, FA Almarzooqi, HA Arafat. Journal of Membrane Science 502, …

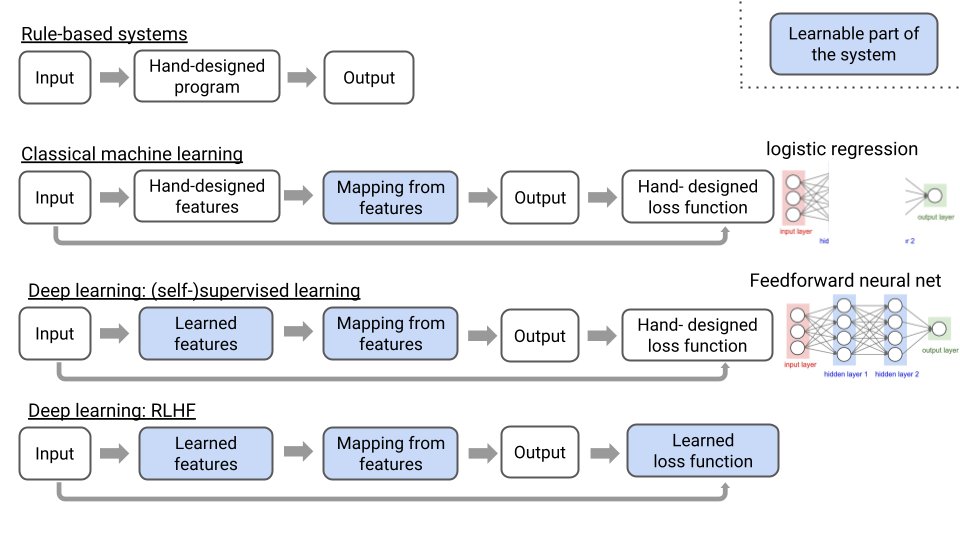

MIT EI Seminar: Hyung Won Chung from OpenAI, "Don't Teach. Incentivize." I am a research scientist at OpenAI, working on ChatGPT. My research focuses on large language models (LLMs).

Hyung Won Chung: This is my diagram illustrating the same concept. The x-axis represents compute (computation or data), and the y-axis represents performance (some measure of intelligence). It looks like a cartoon.

Hyung Won Chung: We can take two approaches, one with more structure and one with less structure.

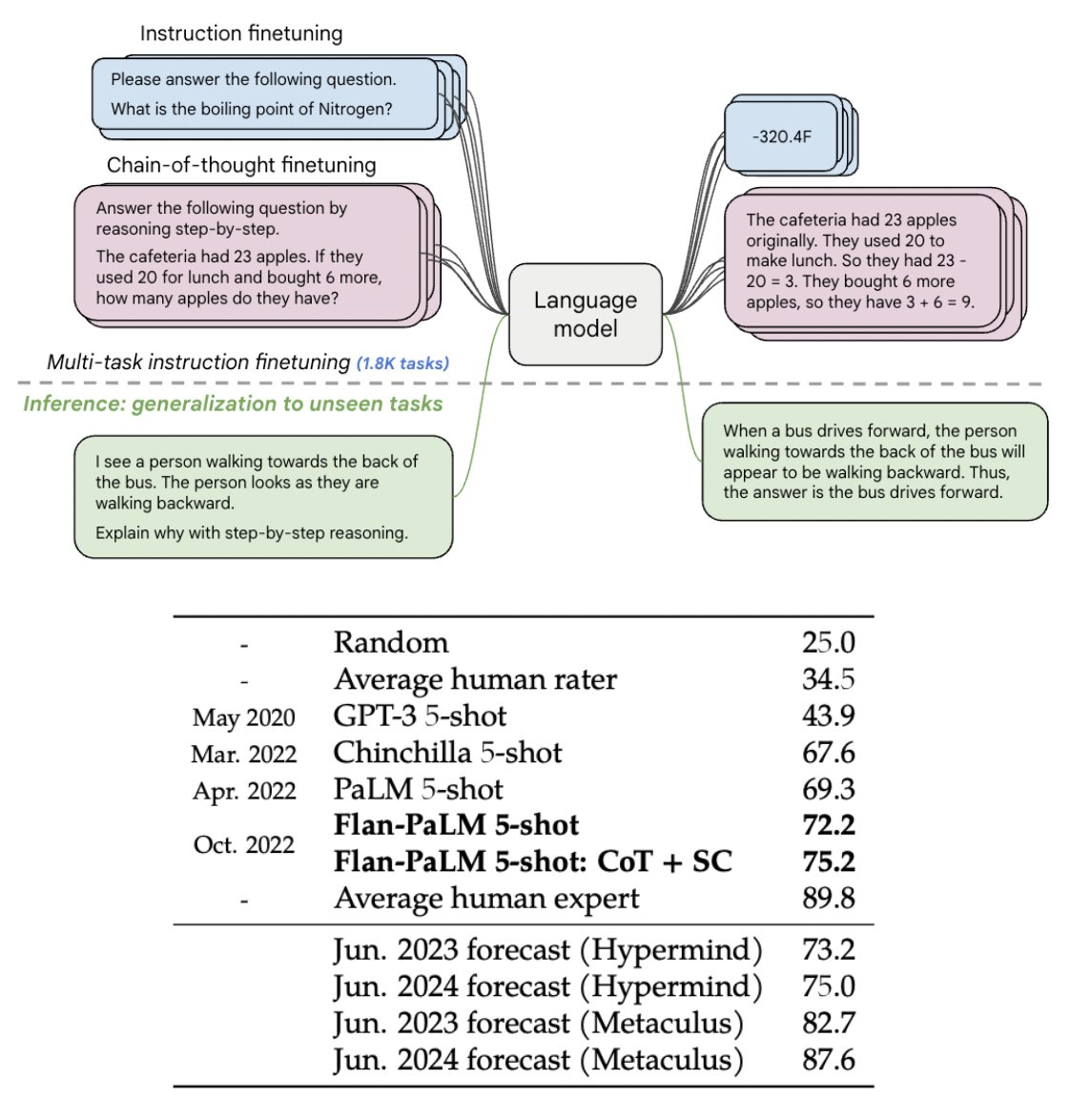

Recently, OpenAI research scientist Hyung Won Chung gave a talk titled "Large Language Models (in 2023)" at Seoul National University. In this talk, he discussed the emergence phenomenon in large language models…

In this lecture from Stanford's CS25 course, Hyung Won Chung, a research scientist at OpenAI, discusses the history and future of Transformer architectures, focusing on the driving forces behind AI advancements.

The talk begins with an introduction to the rapid pace of AI development and the importance of studying changes in AI to predict…

Hyung Won Chung spends significant time defining a story to tell, even for technical talks.